AI in Australian Government: Insights from the Frontline

How Australia’s public sector is adopting AI tools like Microsoft 365 Copilot - and what it means for skills, strategy, and service delivery

Artificial intelligence (AI) is rapidly reshaping the way the public sector works, and nowhere is this more evident than in Australian government, where agencies are testing and scaling tools like Microsoft 365 Copilot. This uptake is part of a wider trend of AI adoption in Australia, where organisations are balancing innovation with governance. This article brings together frontline observations, key data points from the APS whole-of-government Copilot trial, and a pragmatic roadmap for capability building, all tailored to government teams.

For evidence-based insights, we reference the Australian Government’s official Microsoft 365 Copilot Evaluation Report from the Digital Transformation Agency (DTA). For practical upskilling aligned to AI adoption in Australian government, see Nexacu’s Microsoft Copilot courses and AI for Business training, designed for public sector teams.

Executive takeaways on AI in Australian Government

- • Meaningful gains in core tasks: summarisation, first-draft creation, and information search showed perceived efficiency and quality improvements.

- • Time reallocation to higher-value work: ~40% of participants reported shifting time to mentoring, stakeholder work, planning, and product improvement.

- • Training multiplies impact: confidence rose by 28 percentage points among those receiving 3+ forms of training.

- • Moderate overall usage: about one-third used Copilot daily; usage concentrated in Teams and Word.

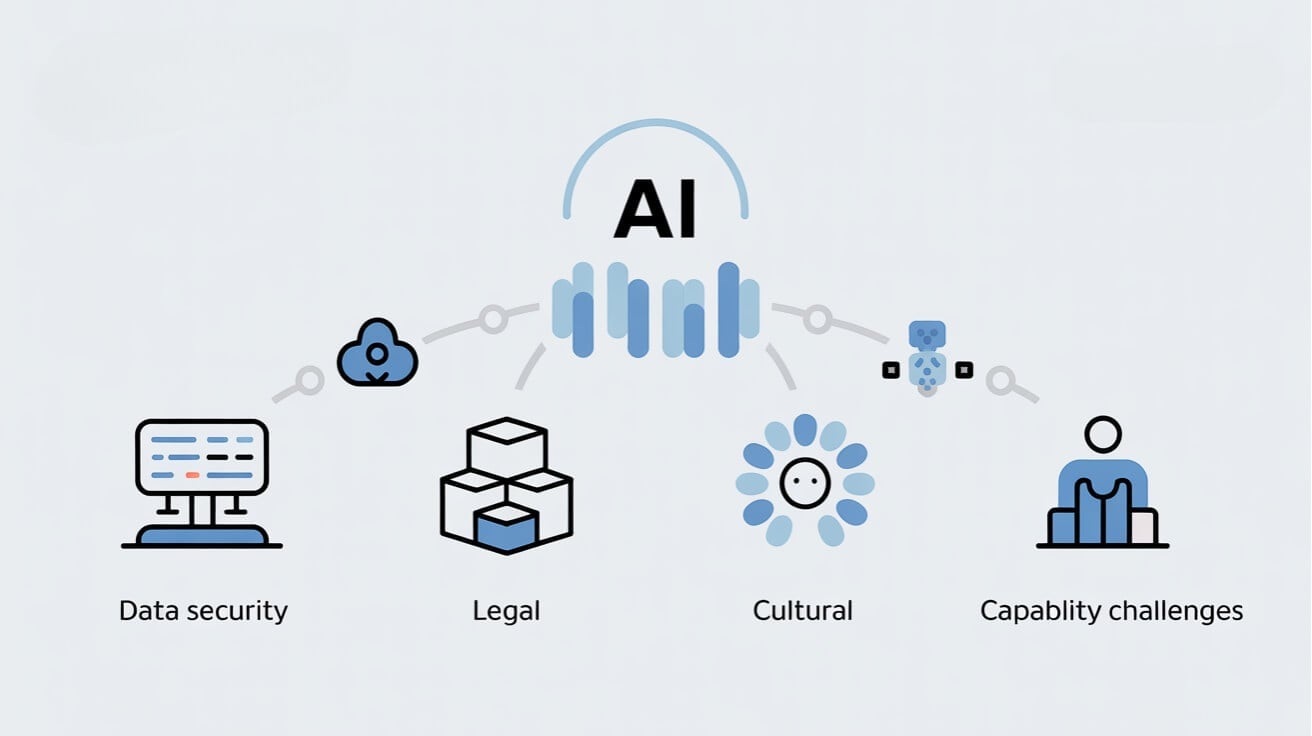

- • Adoption is a change program, not just a rollout: technical, capability, legal, cultural, and governance barriers must be actively managed.

Source: Microsoft 365 Copilot Evaluation Report (digital.gov.au)

Context: rapid adoption of AI in Australian government

Generative AI became widely accessible within a few years, prompting the APS to enable safe, responsible experimentation so staff could explore productivity opportunities without compromising compliance. Microsoft 365 Copilot was chosen for the 6-month whole-of-government trial, announced on 16 November 2023, because it integrates with existing Microsoft 365 apps and could be deployed quickly under existing contracts. Between January and June 2024, agencies nominated participants to receive more than 5,765 Copilot licences for everyday use in familiar applications, a key milestone in the broader story of AI in Australia.

This approach allowed APS teams to test the value of AI in government in real work contexts rather than artificial pilots. It also meant agencies could consider future opportunities and challenges with grounded evidence - not just vendor claims.

Evaluation objectives & method (high level)

The DTA engaged Nous Group to assist with the evaluation, and the Australian Centre for Evaluation was consulted on methodology. The evaluation was guided by four objectives, each with a key line of enquiry:

- Employee-related outcomes: effects on productivity, output quality, process improvement, and delivery on priorities. Line of enquiry: what are the perceived effects of Copilot on APS employees?

- Productivity sentiment: staff perceptions of productivity benefits. Line of enquiry: what are the perceived productivity benefits of Copilot?

- Whole-of-government adoption: the extent to which generative AI can be implemented safely and responsibly. Line of enquiry: what are the short- and long-term adoption challenges?

- Unintended outcomes: benefits, consequences, or risks emerging from Copilot use. Line of enquiry: what broader effects could impact the APS?

The trial was non-randomised and cross-agency, reflecting real-world conditions. The report sets out the detailed methodology and limitations; even so, this design provides practical insight for policy and delivery leaders weighing the costs, benefits, and risks of generative AI in Australia’s public sector.

Frontline findings: what worked, where, and for whom

Where value showed up with AI in government

- Summarisation: meeting notes, long email threads, document distillation.

- First drafts: briefings, consultation summaries, policy scaffolds, content rewrites.

- Information search: faster retrieval of content across Microsoft 365 (e.g., Teams, OneDrive, SharePoint) to orient work.

Participants estimated time savings of up to an hour when performing one of these activities. Reported time savings were strongest for APS3–6 and EL1 staff, as well as ICT-related roles, where repetitive knowledge work is common.

Usage and satisfaction in Australian government AI programs

- Daily use: about one in three used Copilot daily.

- App focus: usage concentrated in Teams and Word; Excel functionality and Outlook access issues hampered broader adoption.

- Optimism: 77% were optimistic at the end of the trial, and 86% wished to continue using Copilot.

- Positive sentiment: highest among SES staff (93%) and Corporate roles (81%).

Productivity & quality outcomes for AI in Australia’s public sector

- Speed: 69% agreed Copilot improved the speed of completing tasks.

- Quality: 61% agreed Copilot improved work quality.

- Manager perspective: ~65% of managers observed a productivity uplift across their team.

- Reallocation: ~40% reallocated time to higher-value activities (mentoring, stakeholder engagement, strategic planning, product enhancement).

Caveat: up to 7% reported Copilot added time to activities, usually due to unpredictability or verification overhead when context was incomplete or prompts were weak.

Source: Microsoft 365 Copilot Evaluation Report (digital.gov.au)

Adoption barriers: technology, capability, culture, and governance

The trial shows that enabling AI in Australian government is a change program - not just a licence deployment. Agencies reported obstacles across several dimensions:

Technical

- Integration challenges with non-M365 tools, such as the accessibility and classification tools JAWS and Janusseal (integration was out of scope for the trial).

- Information architecture and permissions: Copilot surfaces whatever content a user's permissions allow, so it can amplify poor data hygiene and weak security settings.

Capability

- Staff need tailored training with agency-specific scenarios, not generic demos.

- Prompt engineering, context-setting, and data-aware retrieval are essential skills.

Legal & governance

- Uncertainty around disclosure of AI use, accountability for outputs, FOI applicability, and consent for meeting transcription.

- 61% of managers could not confidently identify AI-generated outputs, so a transparency and labelling policy is required.

Culture

- Stigma about “using AI to do the work”, discomfort with meeting transcription, and perceived pressure to consent to it.

- Need for champions to showcase high-value use cases and normalise responsible usage.

References & resources: Digital Transformation Agency • OECD Digital Government • World Economic Forum on AI in the public sector • CSIRO AI Roadmap

Skills & training pathways for AI in Australian government teams

Training is a strong predictor of confident use. The evaluation found that 75% of participants who received three or more forms of training were confident using Copilot, 28 percentage points higher than those who received a single form. In practice, agencies benefit from a blended approach: foundations for everyone, role-based scenarios for common tasks, and deep dives for champions.
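Because the 28-point gap is measured in percentage points rather than percent, the relative effect is larger than it first appears. A quick worked check from the two reported figures (the 47% baseline is derived here by subtraction, assuming the comparison group is exactly the one-form cohort; it is not quoted from the report):

```latex
\begin{aligned}
\text{Confidence (one form of training)} &= 75\% - 28\ \text{pp} = 47\% \\
\text{Relative uplift} &= \frac{75\% - 47\%}{47\%} = \frac{28}{47} \approx 60\%
\end{aligned}
```

On that reading, staff with three or more forms of training were roughly 1.6 times as likely to report confidence as those with one.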

Nexacu’s government-ready pathways align to this approach and support AI adoption in government:

- Microsoft Copilot courses - practical workflows in Outlook, Word, Excel, PowerPoint, and Teams; prompt patterns; meeting summaries; content rewrites; responsible use.

- AI for Business - strategy, governance, risk, and ROI for leaders and program owners; change management and capability uplift planning.

- Power BI - data literacy, dashboards, and AI-assisted insight for policy and service design.

- Professional Development - communication, stakeholder engagement, and leadership support for AI adoption.

A practical capability framework for AI adoption

- Foundations for all: AI basics, privacy & security, FOI awareness, prompt hygiene, responsible disclosure.

- Role-based skills: policy drafting, citizen comms, case work, project documentation, analytics orientation.

- Champion cohort: advanced prompting, use case design, measurement, coaching peers, governance liaison.

- Leadership: KPIs, risk appetite statements, investment cases, adaptive planning for rolling releases.

Risk, ethics & governance: adopting AI the public-purpose way

The evaluation surfaces longer-term risks that matter for government leadership:

- Jobs & skills composition: potential displacement risks concentrated in administrative roles (with equity implications for women and junior staff).

- Bias & culture: outputs may skew toward Western norms and could mishandle cultural data (e.g., First Nations words or imagery) without safeguards.

- Skills atrophy: risk of over-reliance on AI for summarisation and drafting.

- Vendor lock-in & environment: concerns about dependence on a single ecosystem and environmental impacts.

Mitigations include transparent policies on AI use, disclosure expectations, auditability of outputs, culturally safe content guidance, and prioritising accessibility. Agencies should also maintain options in procurement, invest in data hygiene, and align risk management with OECD Digital Government principles.

30-60-90 Day AI Adoption Roadmap

A phased plan to stand up safe, practical AI capability in Australian government agencies, from guardrails to pilots to scale.

First 30 Days - Orientation & Guardrails

Stand up governance, tidy data, and launch foundation skills.

- Form an AI working group (IT, Records, Legal/FOI, Privacy, Security, Change).

- Define risk appetite, disclosure wording, and a transcription consent protocol.

- Run a data hygiene sprint in SharePoint/OneDrive (permissions, metadata, retention).

- Launch foundation training (Copilot basics, prompt hygiene, responsible use).

- Choose 3–5 low-risk workflows to pilot (summaries, first drafts, information search).

Days 31–60 - Pilot & Measure

Operationalise a handful of workflows and prove value.

- Publish approved prompts & “how we work” playbooks for each pilot.

- Train champions to coach peers; schedule office hours.

- Track baselines: time saved, rework rate, queue time, satisfaction.

- Start consistent labelling: “AI-assisted using Copilot on <date>”.

- Pair AI with analytics (narrative summaries for Power BI dashboards).
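Baseline tracking can start as a spreadsheet, but a small script makes the calculation repeatable across pilots. The sketch below is a minimal, hypothetical example: the `PilotTask` record, its fields, and the sample values are illustrative assumptions, not a format from the DTA report or any Microsoft tool. It covers time saved, rework rate, and satisfaction; queue time would need start and finish timestamps from your workflow system.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotTask:
    """One completed task in a pilot workflow (hypothetical log format)."""
    baseline_minutes: float  # typical time for this task before Copilot
    actual_minutes: float    # time taken with Copilot, including checking the output
    needed_rework: bool      # True if the AI-assisted draft had to be substantially redone
    satisfaction: int        # staff rating on a 1-5 scale


def summarise_pilot(tasks: list[PilotTask]) -> dict[str, float]:
    """Return average minutes saved per task, rework rate, and mean satisfaction."""
    if not tasks:
        raise ValueError("no tasks logged yet")
    return {
        # Negative values are kept: they record tasks where Copilot added time.
        "avg_minutes_saved": mean(t.baseline_minutes - t.actual_minutes for t in tasks),
        "rework_rate": sum(t.needed_rework for t in tasks) / len(tasks),
        "avg_satisfaction": mean(t.satisfaction for t in tasks),
    }


if __name__ == "__main__":
    # Illustrative values only, not trial data.
    log = [
        PilotTask(baseline_minutes=60, actual_minutes=25, needed_rework=False, satisfaction=4),
        PilotTask(baseline_minutes=45, actual_minutes=50, needed_rework=True, satisfaction=2),
        PilotTask(baseline_minutes=30, actual_minutes=15, needed_rework=False, satisfaction=5),
    ]
    print(summarise_pilot(log))
```

Keeping negative time savings in the average, rather than discarding them, means tasks where Copilot added time (as up to 7% of trial respondents reported) still show up in the pilot results.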

Days 61–90 - Scale & Embed

Expand to more teams and bake practices into BAU.

- Extend successful pilots to adjacent functions; publish intranet case studies.

- Update guardrails with Legal/FOI & Records based on pilot learnings.

- Commission role-based deep dives (policy, comms, case management, procurement).

- Set team KPIs: earlier decision points, time saved, quality indicators, citizen response times.

- Plan next-wave use cases; add AI disclosure & accessibility checks to templates.

Evidence base: Microsoft 365 Copilot Evaluation Report (digital.gov.au)

APS-style use cases you can deploy now

Below are straightforward, low-risk use cases aligned to the strongest benefits in the evaluation. Each suits Teams and Word, where trial usage was concentrated, and supports scalable AI adoption in Australian government.

Policy & program

- Summarise stakeholder roundtables and produce options papers with cited source links.

- Draft briefings and Q&As for ministerial offices from material in existing SharePoint folders.

- Generate literature scan outlines; humans verify and add sources.

Citizen communications

- Draft response templates for repeat enquiries; personalise with case details.

- Produce plain-language summaries and accessibility-first versions for diverse audiences.

Corporate services & governance

- Summarise procurement standards or policy updates into digestible, action-oriented memos.

- Draft committee papers from template repositories; flag missing sections for SMEs.

Data & analysis

- Generate narrative summaries to accompany Power BI dashboards.

- Produce “insights to action” lists from monthly reporting packs.

Practical guidance: guardrails, disclosure & records

Driving adoption requires practical, low-friction guidance staff can apply in seconds:

- Approved prompts: publish vetted prompt patterns for common tasks; encourage reuse and iteration.

- Disclosure: “This document was AI-assisted using Copilot on <date>.” Simple, consistent labelling builds trust.

- Transcription consent: plain-language scripts, opt-in by default, alternatives for sensitive sessions.

- Records & FOI: store prompts/outputs in the business record where they inform decisions; align with agency disposal schedules.
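A consistent disclosure line is easiest to enforce when it is generated rather than typed by hand. As a minimal sketch, assuming labels are applied by a script or template macro, the hypothetical helpers `disclosure_label` and `add_disclosure` below build the disclosure sentence from the guidance and append it only when a document does not already carry one. The day-month-year date format is an assumption; follow your agency's style guide.

```python
from datetime import date


def disclosure_label(assisted_on: date, tool: str = "Copilot") -> str:
    """Build the AI-use disclosure sentence (hypothetical helper)."""
    # Day-month-year, e.g. "30 June 2024"; this format is an assumption, not an APS rule.
    formatted = f"{assisted_on.day} {assisted_on.strftime('%B %Y')}"
    return f"This document was AI-assisted using {tool} on {formatted}."


def add_disclosure(text: str, assisted_on: date, tool: str = "Copilot") -> str:
    """Append the disclosure once; return the text unchanged if one is already present."""
    if "AI-assisted using" in text:
        return text
    return f"{text.rstrip()}\n\n{disclosure_label(assisted_on, tool)}\n"


if __name__ == "__main__":
    draft = "Briefing: summary of stakeholder roundtable."
    labelled = add_disclosure(draft, date(2024, 6, 30))
    print(labelled)
    # Running it again does not add a second label.
    assert add_disclosure(labelled, date(2024, 6, 30)) == labelled
```

Making the helper idempotent means it can run on every save or export without stacking duplicate labels.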

Evidence you can cite on AI in Australia

- Microsoft 365 Copilot Evaluation Report (digital.gov.au)

- OECD - Digital Government

- CSIRO - AI Roadmap (Australia)

- World Economic Forum - Harnessing AI in government

- Microsoft - Copilot overview

Related Nexacu courses for government teams

- Microsoft Copilot for Microsoft 365 - Outlook, Word, Excel, PowerPoint, Teams; prompts, governance, and safe usage.

- AI for Business - executive briefings, risk frameworks, adoption metrics, use-case design.

- Power BI pathway - data models, governance, DAX, performance, and analytic storytelling.

- Professional Development - communication, stakeholder engagement, leadership for change.

Frequently asked questions about AI in Australian government

How is AI in Australian government improving productivity?

The Copilot trial showed that AI in Australian government can streamline summarisation, drafting, and information search. 69% reported faster task completion and 61% reported quality improvements, allowing staff to focus on higher-value work like policy design and citizen engagement.

What about risks like FOI, bias, or transcription consent?

These are real and manageable with clear guardrails: simple disclosure/labelling patterns, accessible consent options for meetings, culturally safe content guidance, and records alignment. Agencies should involve Legal/FOI, Privacy, Security, and Records from day one.

Does training actually change outcomes in AI in Australia’s public sector?

Yes. Among staff who received three or more training formats, confidence was 28 percentage points higher than among those who received one. We recommend blended pathways - foundations, role-based scenarios, and champion enablement via Copilot courses and AI for Business.

Will AI replace APS roles?

The report flags potential impacts on job composition, especially in administrative roles, with important equity considerations. The strategic response is reskilling, redesigning workflows, and ensuring that AI augments rather than replaces critical human judgement and community engagement.

Where should we start with AI in government?

Pick three low-risk, high-value workflows (e.g., meeting summaries, first drafts, information searches), publish approved prompts, and measure time saved. Build momentum with champions and share results across the department.

Conclusion: capability first, technology second

The APS Copilot trial shows that generative AI in Australian government can deliver tangible benefits if agencies build skills, set clear guardrails, and manage culture. With adaptive planning and transparent governance, AI becomes a practical ally for better policy, faster services, and stronger outcomes for citizens across Australia.

Notes: Statistics and findings cited from the Australian Government’s Microsoft 365 Copilot Evaluation Report. Agencies should review the full report for methodology, limitations, and contextual detail.